Oh, hello there! You may remember me from such BrightonSEO roundups as “On-Stage SEO: My SERP for BrightonSEO 2018”. Well, if that one gave you thrills and/or chills, you’d better wrap up warm — this one’s a doozy.

Round 2 of 2018’s BrightonSEO brace began much as the last one did. I tussled with GWR once again, ending after several hours with a grudging armistice, and I was back basking in the bright Brighton lights. And, albeit to a lesser extent, gasping in the hazy Brighton smog (it’s quite the smokeshow).

This time, I had a proper badge ahead of time, and didn’t need to have my name scrawled in felt tip pen on a blank badge. I was able to march confidently into the building with my properly-printed ID suspended from a Pitchbox lanyard around my neck. And while the layout felt the same, the lineup had my interest decidedly piqued: my schedule was fairly tech-heavy.

When the Seeker team strode from the building roughly 9 hours later with trains to catch (stopping only for a hasty team photo), I had a lot of great stuff to think about. All the talks I had eagerly attended had coalesced in my mind into an engaging whole. The theme? Looking past the minutiae of SEO and getting to grips with more well-rounded methods.

Let’s head back into the highlights of those 9 hours to see what fueled this line of thought, and review precisely why I’m calling this BrightonSEO the big-picture SEO showcase.

Round 1: Customer Experience

Customer experience in general is something I’ve always found interesting because it takes a high-level view of the complex decision-making process, so this opening was right up my alley. It didn’t disappoint.

First up was Reviewing Success: A Proactive Approach to Reputation Management from Nathan Sansby. This talk took a general look at the importance of reviews in determining online reputation. While I was already familiar with the influence of social proof, there were some elements I’d never really considered, including the following:

- You can’t just focus on product reviews or reviews on your website. You need to look at the overall view of your brand. If you search for the name of your business plus “reviews”, you can start to understand how people are talking about you in general, not just on the forums you think are most worth your time.

- Consumers (particularly younger ones) are more likely to be swayed by relevant online reviews than by reviews from family members or friends. This means that even a brand with many passionate advocates can suffer greatly at the hands of negative reviews.

- If you manage to win over an unhappy customer, there’s a 55% chance that they’ll shop with you again, and a surprising 25% chance that they’ll actively recommend you. You shouldn’t view any negative review as a lost cause.

Next up was It’s A Stitch Up! Sewing Up Consumer Intent With Keywords from the thoroughly-entertaining Aiden Carroll. With a cartoony presentation style and a light self-effacing tone (greatly underplaying his eloquence, he described himself as “like jam” in that he is “best enjoyed by 5-year-olds”), he was a joy to listen to.

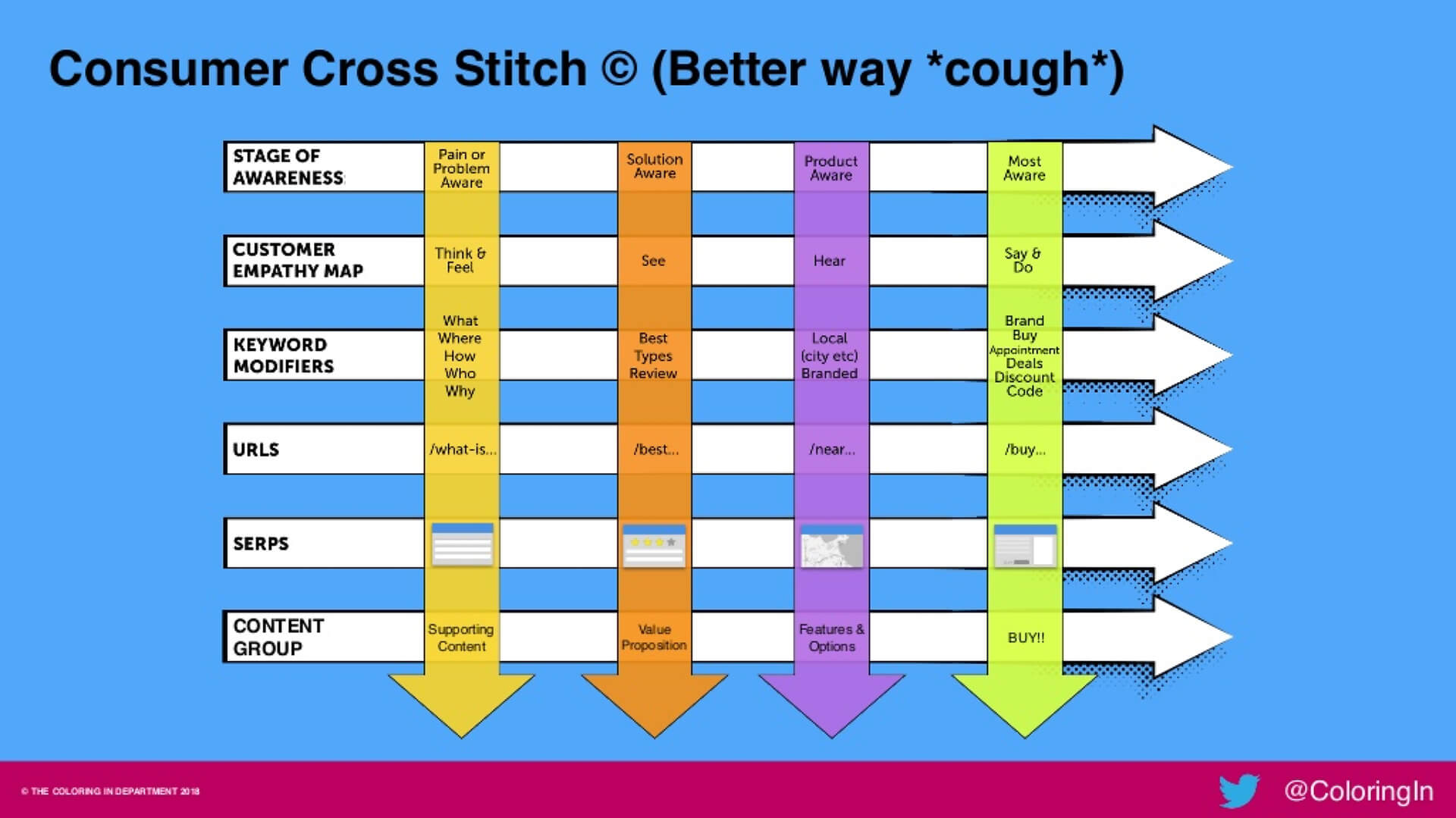

The central thrust of his talk concerned the role of his possibly-patented Consumer Cross Stitch (pictured below) in determining consumer intent. Buyer personas, he stated, are “grand works of fiction” and often useless — we need to go much deeper and think by stage.

Only mildly undermined by the fact (pointed out by some wag at the end) that there’s no actual cross-stitch present. This wouldn’t hold together at all!

The way to figure out how to address consumer intent with your content and UX is to think about the four main stages in the process:

Pain aware -> Solution aware -> Product aware -> Most aware.

Every stage comes with a unique set of challenges, requiring you to think about what the consumer is feeling, what keywords they’re likely to use, what kind of URL they might expect, etc. Anticipate the separate demands and you can adjust your content strategy to pointedly cover all the bases.

Polishing off this round was Found vs. Chosen: Why Earning the Long Click Should be the Goal for Your Brand’s Web Content from the engaging Ronell Smith. This might actually have been my favourite of the three, as it made me think quite differently about how the online exchange of value really works.

Formerly of Moz, Smith works with companies on their content strategies, and made a point of commenting on the silly obsession with “being number 1” in search, even though it doesn’t inherently guarantee success. He pointed out that even if Google believes a particular site warrants the top spot, a negative brand perception can lead people to quickly disregard it and choose something lower on the page.

Instead of producing piecemeal content, he advocated for topic clusters. Create a strong overarching hub for a topic, write content around niche elements of that topic, and link to that content from the hub. The hub becomes massively valuable without needing to be greatly complex in itself, and each piece of content gains more value through being part of a greater whole. This is something that HubSpot is doing very well.
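The hub-and-spoke structure can be pictured as a small internal link graph. This is a minimal TypeScript sketch, not Smith’s or HubSpot’s method: the URLs are hypothetical, and the back-links from each article to the hub are an assumption (the talk, as I noted it, only described the hub linking out).

```typescript
// Minimal model of a topic cluster as an internal link graph.
// URLs are hypothetical; spoke -> hub back-links are an assumption.
interface InternalLink {
  from: string;
  to: string;
}

function buildTopicCluster(hubUrl: string, spokeUrls: string[]): InternalLink[] {
  const links: InternalLink[] = [];
  for (const spoke of spokeUrls) {
    links.push({ from: hubUrl, to: spoke }); // hub links out to each niche article
    links.push({ from: spoke, to: hubUrl }); // assumed: each article links back to the hub
  }
  return links;
}

// Count inbound internal links per URL.
function inboundCounts(links: InternalLink[]): Map<string, number> {
  const counts = new Map<string, number>();
  for (const link of links) {
    counts.set(link.to, (counts.get(link.to) ?? 0) + 1);
  }
  return counts;
}

const cluster = buildTopicCluster("/guides/email-marketing", [
  "/guides/email-marketing/subject-lines",
  "/guides/email-marketing/send-times",
  "/guides/email-marketing/segmentation",
]);
console.log(inboundCounts(cluster).get("/guides/email-marketing")); // 3
```

Every new article adds another inbound link to the hub, which is one way to see how the hub gains value without growing more complex itself.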

The main point of this talk, though, was that the long click is extremely important. If someone simply happens upon your site while browsing, there’s no inherent meaning to it. They might be on the wrong page, or they might decide that they’re not interested. The long click, though, is where someone actively navigates towards you. It’s purposeful, and thus very lucrative.

And winning the long click isn’t about being the last click in the user journey — it’s mainly about playing a part in it. As long as your brand appears at some point in a user journey and does something useful, it will earn you goodwill, forming positive associations that will benefit you in the long run. That means you don’t need to try to keep people trapped in your content. Try your best to help them, even if it means sending them elsewhere, because if you’re really worth their time then they’ll eventually find their way back to you (and spend more because of it).

Big-Picture Brevity

- Don’t just focus on specific product reviews — look at your entire brand reputation.

- Don’t settle for vague buyer personas — target stage-delineated intent.

- Don’t obsess about the last click — think about adding value to the overall user journey.

Round 2: Mobile & AMP

This round was all about the joys of smartphone browsing, and the importance of being fast. Thankfully, each talk got underway very promptly, so no one felt inclined to leave the room and find a faster presentation.

First up was Mobile Performance Optimization for Marketers from Emily Grossman, who got things off to an alarming start by showing a study demonstrating that slow sites actually stress people out in a major way. Surfing rage is apparently a serious danger. And with speed now a ranking factor for mobile sites, we need to be quick — but we’re not. Why?

- We don’t know how slow our sites actually are. We test on high-end phones and fast networks, and assume that our results are representative of what the average consumer would experience. This makes no sense, so we should either use weaker devices on slower networks or just simulate them using customisable tests.

- We pass the buck. Someone ultimately needs to establish ownership over the speed of a site. If no one else seems inclined to do it, you should step up to the plate and make sure that improvements are made, because they’ll otherwise be overlooked.

- We don’t know what to measure. We check how quickly pages load, but don’t think about how fast they feel. Pages are often mostly usable before they finish loading.

- We misinterpret analytics. You might look at your metrics and see that your page loading times have drastically dropped. Has your site performance improved somehow? Possibly — or maybe the release of a new iPhone has sped up loading on every site, in which case you haven’t meaningfully gained anything.
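The new-iPhone scenario is a mix-shift effect, and a little arithmetic shows how it fools an aggregate metric. In this sketch the session counts and load times are invented for illustration: per-device load times are identical in both periods, and only the share of traffic coming from a fast new phone changes.

```typescript
// Invented data: each device is exactly as fast before and after,
// only the traffic mix changes.
interface DeviceSegment {
  device: string;
  sessions: number;
  avgLoadSeconds: number;
}

// Session-weighted average load time across all segments.
function weightedAverageLoad(segments: DeviceSegment[]): number {
  const totalSessions = segments.reduce((sum, s) => sum + s.sessions, 0);
  const totalSeconds = segments.reduce((sum, s) => sum + s.sessions * s.avgLoadSeconds, 0);
  return totalSeconds / totalSessions;
}

const before: DeviceSegment[] = [
  { device: "older phones", sessions: 800, avgLoadSeconds: 6 },
  { device: "new flagship", sessions: 200, avgLoadSeconds: 2 },
];
const after: DeviceSegment[] = [
  { device: "older phones", sessions: 400, avgLoadSeconds: 6 },
  { device: "new flagship", sessions: 600, avgLoadSeconds: 2 },
];

console.log(weightedAverageLoad(before).toFixed(1)); // 5.2
console.log(weightedAverageLoad(after).toFixed(1)); // 3.6
```

The average drops from 5.2 to 3.6 seconds although no device got any faster, so it’s worth segmenting speed reports by device (or holding the device mix constant) before crediting the site with an improvement.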

- We don’t know how to deal with developers. We tell them what changes to make, but not why, which leaves them disinclined to help. If we give them full explanations of our reasoning, they’ll be more willing to get involved.

Next up was We Made our Website a Progressive Web App and Why You Should Too from the delightfully-named Miracle Inameti-Archibong, and I was very interested to hear this one because I’m all in favour of PWAs.

Representing Erudite, Inameti-Archibong explained the central appeal of the PWA that led her and her team to choose that approach for their website. A Progressive Web App is intended to function just as well on any device, platform, or network, with the “app” persisting even when there’s no connectivity. Thanks to caching, a PWA should load almost instantly on repeat visits.
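That caching is typically handled by a service worker using a cache-first strategy: answer from the cache when possible, otherwise fetch from the network and store the result. Here is a minimal sketch of that logic, not Erudite’s implementation. In a real service worker the cache would be the browser’s Cache API and the network call would be `fetch()`; here a `Map` and an injected function stand in for them (the class and parameter names are hypothetical) so the logic runs outside a browser.

```typescript
// Cache-first lookup: serve from cache when possible, otherwise hit the
// network once and remember the response. A Map stands in for the Cache API
// and `network` stands in for fetch().
type Fetcher = (url: string) => Promise<string>;

class CacheFirst {
  private cache = new Map<string, string>();
  private network: Fetcher;
  networkRequests = 0;

  constructor(network: Fetcher) {
    this.network = network;
  }

  async get(url: string): Promise<string> {
    const cached = this.cache.get(url);
    if (cached !== undefined) {
      return cached; // repeat visit: no network round trip, works offline
    }
    this.networkRequests++;
    const body = await this.network(url); // first visit: needs connectivity
    this.cache.set(url, body);
    return body;
  }
}

let online = true;
const sw = new CacheFirst(async (url) => {
  if (!online) throw new Error("offline");
  return `<html>${url}</html>`;
});

sw.get("/")
  .then(() => {
    online = false; // the connection drops
    return sw.get("/"); // still served from the cache
  })
  .then((body) => console.log(body, sw.networkRequests)); // <html>/</html> 1
```

The trade-off of cache-first is freshness: cached pages need a versioning or update strategy, or visitors may keep seeing stale content.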

To meet standards, a PWA needs each of the following:

- HTTP2 multiplexing.

- A service worker.

- A Time to First Interactive/Consistently Interactive (TTFI/CI) of under 10,000 milliseconds (10 seconds).

- The facility to be added to home screens.

- A responsive design.

- Good SEO and UX fundamentals.

Developing a standard app is complex, whereas a standard website can be migrated to function as a PWA. What’s more, you can submit a PWA to app stores, so from the user’s perspective there won’t be much difference. There are even plugins for turning sites into PWAs, most notably on WordPress.

The only major obstacle is the need for lean Javascript frameworks (older frameworks are much too slow for PWAs), but if you keep your site updated, it shouldn’t be all that difficult. Overall, unless you have a burning need for distinct UX designs on mobile and desktop, there’s no reason not to take the PWA approach for your site: it takes less time, saves money, and avoids the need for split development teams.

Last but not least, there was AMP Implementation on Non-Standard Content Management Systems from Natalie Mott. I wasn’t overly familiar with the complexities of AMP, so it was fascinating to learn about how this technology has been used (or ignored) thus far.

Mott explained that only 0.1% of sites use AMP, so it hasn’t exactly received an enthusiastic reception. The ecommerce world doesn’t rate it very highly, it’s viewed as strongly associated with Google (more on the general wariness about Google’s methods later), it doesn’t seem like a high priority, and there aren’t many compelling case studies about AMP proving successful. It isn’t even a direct ranking factor — it certainly didn’t protect any sites from losing rankings in the so-called Medic update.

Some industries have adopted it, such as the comparison field: when people are looking to get specific buyer-intent queries answered immediately, it’s obviously important to be maximally optimized for speed. But in general, there doesn’t seem to be much reason to use AMP unless your entire industry is adopting it or you’ve implemented every other improvement you can think of and you’re feverishly looking for some kind of edge.

If you must try AMP, manual implementation is likely to be a pain because you’ll need to set up AMP analytics, go through validation, and sort through the inevitable input errors. WordPress is apparently the most stable platform for it, with various plugins capable of handling it for you.

Overall, this talk was a valuable cautionary tale! It’s easy to get carried away with new technologies and standards, and AMP certainly has the cool-sounding name to whip up some buzz, but it doesn’t seem all that special — particularly in light of the growth of PWAs.

Big-Picture Brevity

- Don’t just look at page loading speed — look at overall site speed across all platforms.

- Don’t split development — create a website flexible enough for any device.

- Don’t fixate on fads — look for general improvements that aren’t flashy but are effective.

Round 3: Javascript and SEO

Two talks, two speakers with fine heads of blue hair. I’m not sure if that was coordinated or simply a neat coincidence. Regardless, I was ready for this round of talks — what I lack in understanding of Javascript, I make up in enthusiasm.

First up was Building search-friendly web apps with JavaScript from Martin Splitt (representing Google, a company that seems to have a little sway in the SEO world). This talk was all about how Google can crawl and index Javascript-rich pages, and the approaches you can take to be more successful.

Following on from the mention of lean Javascript frameworks in the PWA talk, Splitt touched upon how improvements in Javascript frameworks are increasing speed and making page rendering significantly easier. This is definitely a good thing.

However, the rise in single-page apps is presenting difficulties, because they’re harder for crawlers to parse. Not only do they lack the contextual information provided by internal linking structures, but they constitute single points of failure — if some Javascript element of that single page isn’t understood by the crawler, it’ll completely sabotage everything.

Noting that there were over 160 trillion sites on the web in 2016 (meaning there are presumably many more now), Splitt explained that the common assumption that Googlebot crawls, renders and indexes pages in one fell swoop is inaccurate. Instead, the first pass processes only the HTML, with anything involving Javascript deferred until resources are available to render it. This can lead to Javascript content being rendered (and potentially indexed) minutes after HTML content, or even days after.
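The two-wave process can be pictured as an index entry that gets written twice. This toy model only illustrates the idea Splitt described; the queue, the `capacity` parameter and the page data are assumptions, not Google’s actual architecture.

```typescript
// Toy two-wave indexer. Wave 1 stores the raw HTML straight away; wave 2
// replaces it with the rendered HTML later, when rendering capacity allows.
interface CrawledPage {
  url: string;
  rawHtml: string; // what the first wave sees
  renderedHtml: string; // what exists after JavaScript has run
}

class TwoWaveIndexer {
  index = new Map<string, string>();
  private renderQueue: CrawledPage[] = [];

  // Wave 1: index the raw HTML immediately and defer rendering.
  crawl(page: CrawledPage): void {
    this.index.set(page.url, page.rawHtml);
    this.renderQueue.push(page);
  }

  // Wave 2: render up to `capacity` queued pages (hypothetical knob).
  renderBatch(capacity: number): void {
    for (const page of this.renderQueue.splice(0, capacity)) {
      this.index.set(page.url, page.renderedHtml);
    }
  }
}

const indexer = new TwoWaveIndexer();
indexer.crawl({
  url: "/shoes",
  rawHtml: '<div id="app"></div>',
  renderedHtml: '<div id="app"><h1>Red shoes</h1></div>',
});
console.log(indexer.index.get("/shoes")); // <div id="app"></div>
indexer.renderBatch(1);
console.log(indexer.index.get("/shoes")); // <div id="app"><h1>Red shoes</h1></div>
```

Anything that exists only in the rendered HTML stays invisible to the index until the second wave reaches that page, however long the queue happens to be.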

He covered various approaches to handling Javascript-heavy pages, such as hybrid rendering (serving some JS content already rendered and leaving the rest to the client) or using HTML5 tools to replace ugly JS-driven URLs with clean ones. In general, though, it seems best to be extremely careful about leaning too heavily on Javascript. Not only does Google render Javascript relatively sparingly, but it actually still uses Chrome 41 from 2015, so you can’t expect an up-to-date understanding of fresh 2018 code.
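Assuming the “HTML5 tools” here refer to the History API (my reading; the talk title alone doesn’t confirm it), the idea is to give each JS-driven view a real path instead of a fragment such as `#!/shoes/red`, because search engines generally don’t treat a change after the `#` as a separate page. This hypothetical helper shows only the URL mapping; in a browser, the router would pass the result to the real `history.pushState()` API when the view changes.

```typescript
// Hypothetical helper: map a fragment-based route to a crawlable path.
// "https://example.com/#!/shoes/red" -> "/shoes/red"
function fragmentRouteToPath(href: string): string {
  const url = new URL(href);
  const route = url.hash.replace(/^#!?/, ""); // strip a leading "#" or "#!"
  if (route === "") {
    return url.pathname; // no client-side route: keep the existing path
  }
  return route.startsWith("/") ? route : `/${route}`;
}

console.log(fragmentRouteToPath("https://example.com/#!/shoes/red")); // /shoes/red
console.log(fragmentRouteToPath("https://example.com/about")); // /about

// In the browser (not runnable in Node), the router might then call:
// history.pushState({}, "", fragmentRouteToPath(location.href));
```

The server also has to answer requests for those clean paths directly; otherwise a crawler following `/shoes/red` gets an error instead of the page.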

Next up was SEO for AngularJS from Jamie Alberico, and this was something of a head-scratcher initially because I wasn’t familiar with Angular, but Alberico soon explained what it involved and why it was notable.

Angular is an open-source Javascript framework that puts the weight of execution on the browser, allowing for extremely quick loading times. It essentially functions through templates that subsequently get populated with data. The first load of a template is heavy, but once it’s cached, any subsequent updates are very fast. Since 94.9% of websites use Javascript to some extent, finding a way to deliver JS content at speed is vital.

Alberico noted that Screaming Frog’s SEO Spider can save both the initial HTML and the rendered HTML, showing you the difference between what a crawler might see and what a user might see. Using Angular Universal, you can provide server-side rendering, delivering JS content as fully rendered HTML so that any search engine can easily interpret and index it.
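The gap between initial and rendered HTML, and how server-side rendering closes it, can be shown with a toy template. This is not Angular Universal’s API: the `{{key}}` template syntax, the product data and the function names are all hypothetical, and Universal renders real Angular components rather than doing string replacement.

```typescript
// Toy illustration of client-side vs server-side rendering.
const productTemplate = "<h1>{{title}}</h1><p>{{price}}</p>";
const productData: Record<string, string> = { title: "Red shoes", price: "£40" };

// Replace each {{key}} placeholder with the matching data value.
function fillTemplate(tpl: string, data: Record<string, string>): string {
  return tpl.replace(/\{\{(\w+)\}\}/g, (_match: string, key: string) => data[key] ?? "");
}

// Client-side rendering: the initial HTML is an empty shell; the content
// only appears after the browser runs app.js.
function clientSideResponse(): string {
  return '<div id="app"></div><script src="/app.js"></script>';
}

// Server-side rendering: the template is filled before the response is
// sent, so the content is already in the initial HTML.
function serverSideResponse(): string {
  const body = fillTemplate(productTemplate, productData);
  return `<div id="app">${body}</div><script src="/app.js"></script>`;
}

console.log(clientSideResponse().includes("Red shoes")); // false
console.log(serverSideResponse().includes("Red shoes")); // true
```

Comparing the two strings is roughly what comparing initial and rendered HTML in a crawler shows: with client-side rendering, the product name only exists once JavaScript has run.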

She followed up by noting some alternatives. You can use pre-rendering services, but they will then essentially own you, which isn’t a great situation. You can also use DIY pre-rendering options, but you’ll need to be sure that your hosting can handle them. If your site can viably be handled through Angular Universal, that certainly seems like a strong option.

Big-Picture Brevity

- Don’t avoid Javascript, but don’t overuse it — make sure it works for users and crawlers.

- Don’t put too heavy a load on the client or the server — find a middle-ground solution.

Round 4: Ranking Factors

Heading into the last round of talks, I was feeling very engaged and ready to go. The world of ranking factors is the white whale of the SEO industry: Sisyphean as it may be, we can’t help but chase after direct insight into the shadowy calculations that determine our ranking fates.

First up was Making Data Dreams come true: Bridging the Gap between Ranking Factors and SEO Strategy from Svetlana Stankovic and Björn Beth, a jovial and alliterative pair who set out to look at ranking factors through correlations with high-performing sites in specific industries.

- Ecommerce sites have a lot of internal links to products, guides, searches, and locations.

- Finance sites are fast and have small file sizes while maintaining high relevance.

- Health sites are wordy, full of structured lists, and (again) highly relevant.

- Media sites feature many social shares and external links.

- Travel sites are big, slow, image-rich and light on keywords.

Overall, they argued that content creation needs to be an agile process, carefully tailored to meet demand and allowed to change over time. Instead of making a page and then abandoning it, you can build it and then add to it piece by piece, fleshing it out with further links and valuable elements.

In addition, a common thread was that internal search is extremely important. Whatever your industry might be, if your website is big enough that someone could miss out on something, be sure to include a robust search function.

Next up was the delicious-sounding Super Practical Nuggets from Google’s Quality Raters’ Guidelines from Marie Haynes. This caught my attention when I looked at the schedule, because the contribution of quality raters to a ranking process that can feel very cold and artificial is interesting to consider.

To determine how successfully its algorithm is working, Google hires an unknown number of quality raters to assess sites and provide feedback. That feedback doesn’t directly alter rankings, but it does feed back into algorithm development — if ratings consistently indicate that websites of a particular type are not good enough to be ranked as highly as they are, the algorithm can be adjusted to weight factors differently.

This is particularly interesting in light of this year’s Medic update. Haynes believes that it was mostly about the “safety of users”, tying into the E-A-T acronym stressed several times in the rating guidelines: Expertise, Authoritativeness, and Trustworthiness. Through its quality raters, Google is steadily getting better at understanding the elements and functions that make a site feel trustworthy.

Her suggestions for anyone looking to get a medical site to rank well were the following:

- Get links and mentions on authoritative sites. Google knows which sites truly matter in an industry, and one link from such a site will do more than a hundred links from others.

- Exhibit conventional authority on the topic. Make authorship very clear, including qualifications and experience — if you need to, bring in an expert to validate.

- Support all of your medical claims with science, citing references whenever relevant. Medical content lacking citations is likely to be considered unsafe.

Lastly, we had the fascinating Video ranking factors in YouTube from Luke Sherran. I’d actually never given much thought to how YouTube ranks content, so this had exactly the kind of hook needed to keep my energy level up after a long day. Right off the bat, Sherran astutely noted that YouTube is essentially the world’s second-biggest search engine. So much of our general online activity is influenced by the YouTube content we encounter, yet it’s easy to overlook that because we don’t think of it that way.

Breaking down the sources of YouTube traffic, he identified seeding (external link referrals) as making up 5-10% of YouTube views, and organic search as providing 20-40% of views. Surprisingly, related videos make up even more, and homepage suggestions are enormously influential.

The average YouTube session is more likely to be pushed along by the YouTube algorithm than it is to be self-directed. Apparently there were over 2000 experimental changes to YouTube in 2017, which gives you some indication of how sophisticated the algorithm is becoming (relying heavily on machine learning, of course).

As for specific ranking factors, Sherran identified view velocity as playing a major role. Achieve a great enough view velocity after putting your video live, and it will trigger YouTube to extend the reach of your content through recommendations and suggestions (with exponential results).
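View velocity is easy to track yourself as views per hour since publishing. YouTube’s actual signal, and whatever threshold triggers wider recommendations, aren’t public, so treat this as a hypothetical monitoring metric; the view counts below are invented.

```typescript
// Views per hour since the video went live (a simple proxy, not YouTube's formula).
function viewVelocity(views: number, hoursSincePublish: number): number {
  if (hoursSincePublish <= 0) {
    throw new Error("hoursSincePublish must be positive");
  }
  return views / hoursSincePublish;
}

// Invented numbers for two hypothetical videos, six hours after going live.
const strongStart = viewVelocity(1200, 6);
const weakStart = viewVelocity(300, 6);
console.log(strongStart, weakStart); // 200 50
```

Checking this figure at fixed intervals after upload makes it easier to compare launches, for example videos published at different times of day.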

Metadata isn’t very important, but does play a small role in best practices. Captions are worth having, but automatically-generated captions are not 100% accurate, so manually correct them.

To maximise your early views (and achieve strong velocity), you should choose the best times to upload. Go live at the wrong time and your video will be dead in the water. Curiously, thumbnails are extremely important. People will often make video choices based on quick thumbnail impressions, and since each click has the potential to turn into an extended viewing session that makes YouTube money, it’s essential that you turn heads with your thumbnail.

In general, he stressed that you need to have an overall video strategy before you even start filming. Know what your thumbnail will be, how you’ll present your content, and where you’ll fit into the YouTube ecosystem, and you’ll have a good chance of hitting an impressive view velocity and gaining ground through association with other popular videos.

Big-Picture Brevity

- Don’t focus on specific pages — increase the overall value of your entire site.

- Don’t try to game SEO with tricks and tactics — just make your content better.

- Don’t get stuck on Google — think about all search engines.

Round 5: Keynote

There was a palpable buzz in the room when Rand Fishkin (Moz founder, moustache aficionado and presenter extraordinaire) swept onto the stage for the keynote address, and a more awkward and amusing buzz when he discovered that the images for his presentation weren’t loading. Thankfully, he handled it with supreme grace, and the BrightonSEO team got the issues fixed relatively quickly.

The core argument of Fishkin’s presentation was that Google is too big, too powerful, and no longer deserving of the SEO community’s trust. Through its ongoing efforts to extract information from indexed pages and present it directly on the SERPs, it is following through with its determination to make life easier for the searcher but fundamentally betraying the content creators who rely on clicks from organic traffic.

When he demonstrated that it can take six full-page scrolls in a mobile SERP to find the first organic link, it was certainly disconcerting. Desktop search remains relatively unaffected for the time being, but will that last, or will Google sooner or later feel sufficiently emboldened to start pushing organic links down there as well?

The concluding tone was still optimistic, however. While Google seems to be doing its best to make life harder for the SEO world (and websites in general), it’s still the biggest driver of traffic by a wide margin, and the escalating difficulty of ranking will also serve to more clearly differentiate the top performers from the chasing pack. So it isn’t yet time for SEO agencies to despair — the virtual apocalypse is still some way off.

Big-Picture Brevity

- Don’t lose all hope — good SEO work is still extremely valuable.

Wrapping Up

I enjoyed the first of 2018’s BrightonSEOs, but the talks in the second instalment were much more in line with my interests, and I’m very pleased that they came together into such a clear overall theme in my mind. It wasn’t something I expected, but I’ll gladly carry it with me.

In some ways, the SEO industry is still trying to fully emerge from the early days of trying to “hack” rankings and find contrived ways to get content to the top, and I feel that the presentations I attended were very forward-thinking in their commitment to holistic SEO — looking to make general improvements (and essentially just create better content) instead of getting stuck on specific factors.

Want to do great SEO work in the future? Think bigger.